
Computer assisted Objective structured clinical examination versus Objective structured clinical examination in assessment of Dermatology undergraduate students

Correspondence Address:

Chander Grover

Department of Dermatology and STD, University College of Medical Sciences and GTB Hospital, New Delhi - 110 095

India

How to cite this article: Chaudhary R, Grover C, Bhattacharya S N, Sharma A. Computer assisted Objective structured clinical examination versus Objective structured clinical examination in assessment of Dermatology undergraduate students. Indian J Dermatol Venereol Leprol 2017;83:448-452

Abstract

Background: The assessment of dermatology undergraduates has been done through computer assisted objective structured clinical examination at our institution for the last 4 years. We attempted to compare objective structured clinical examination (OSCE) and computer assisted objective structured clinical examination (CA-OSCE) as assessment tools.

Aim: To assess the relative effectiveness of CA-OSCE and OSCE as assessment tools for undergraduate dermatology trainees.

Methods: Students underwent CA-OSCE as well as OSCE-based evaluation of equal weightage as an end of posting assessment. The attendance as well as the marks in both the examination formats were meticulously recorded and statistically analyzed using SPSS version 20.0. Intercooled Stata V9.0 was used to assess the reliability and internal consistency of the examinations conducted. Feedback from both students and examiners was also recorded.

Results: The mean attendance for the study group was 77% ± 12.0%. The average score on CA-OSCE and OSCE was 47.4% ± 19.8% and 53.5% ± 18%, respectively. These scores showed a mutually positive correlation, with Spearman's coefficient being 0.593. Spearman's rank correlation coefficient between attendance scores and assessment score was 0.485 for OSCE and 0.451 for CA-OSCE. The Cronbach's alpha coefficient for all the tests ranged from 0.76 to 0.87, indicating high reliability.

Limitations: The comparison was based on a single batch of 139 students. A similar evaluation of more students, in a larger number of batches over successive years, could help throw more light on the subject.

Conclusions: Computer assisted objective structured clinical examination was found to be a valid, reliable and effective format for dermatology assessment, being rated as the preferred format by examiners.

Introduction

OSCE-based assessment of the clinical skills of medical students was first introduced by Harden and Gleeson in 1979.[1] Since then, its validity and reliability in evaluating clinical skills of medical students have been proven in numerous studies, across various subjects.[2],[3],[4],[5],[6],[7] Over the years, many modifications of the OSCE format such as group OSCE,[8] objective structured long examination record [9] and objective structured video examination [10] have been introduced. CA-OSCE was a modification introduced and evaluated in dermatology in 2012 by our institution.[11] Its validity and reliability as an assessment method have also been established.[11],[12]

The essential difference between OSCE and CA-OSCE is the presence of real patients versus “virtual” patients (computer-based presentation of dermatoses). Concerns have been raised that the validity of CA-OSCE may be low, as students are trained to examine real patients and testing them on standard computer images may not be a true measure of their skills. However, various studies have found a good correlation of CA-OSCE scores with those on conventional clinical examination, thus validating this as an assessment tool.[12],[13] The present study was aimed at assessing the relative effectiveness of OSCE and CA-OSCE as assessment tools for undergraduate dermatology trainees.

Methods

This cross-sectional study involved 6th semester MBBS students undergoing a 40-day clinical posting (as mandated by the Medical Council of India) in the department of dermatology, University College of Medical Sciences and Guru Teg Bahadur Hospital, New Delhi.[14] The students were posted in four batches on a rotational basis from January to June 2015. An end of posting assessment based on the CA-OSCE format has been conducted in our institution for the last 4 years. For the purpose of this study, the students were also subjected to conventional OSCE simultaneously.

At the beginning of the posting, an introductory communication about the modified method of assessment was given to the batch. A meticulous record of their attendance was maintained. At the end of posting, all students underwent both CA-OSCE (50 marks) and OSCE (50 marks) based evaluations, and marks scored in both the formats were recorded. Both examinations were designed on identical formats, carried equal weightage and were aimed at evaluating students' knowledge and clinical skills. The examination was conducted in an institutional lecture hall offering comfortable seating for forty students and a good projection facility for the purpose of CA-OSCE. An antechamber was used for conducting OSCE, having stations with real patients.

The examination was conducted in two phases; Phase 1 of the evaluation was CA-OSCE (ten questions) while Phase 2 was in OSCE format having ten stations, with each student given 2 minutes per station. Preset instructions were given before each part of the examination. Feedback from individual students and examiners was collected thereafter, by means of a questionnaire designed for the purpose.

- Phase 1 (CA-OSCE): A Microsoft PowerPoint® designed evaluation, consisting of ten questions of equal weightage. Each question was in the form of a clinical problem with relevant clinical details and a representative clinical image (two slides), followed by a set of questions pertaining to identification of the dermatosis, its causation, associations, relevant investigations or management issues (five slides). The questions, which were of the short answer type, were then projected. They pertained to the “must know” topics in dermatology as preordained in the university curriculum. The slides were designed to move in an automated manner at predetermined, standard intervals and it was not possible to navigate back. Phase 1 was completed in 35 minutes.

- Phase 2 (OSCE): This consisted of ten clinical stations, carrying equal weightage. The question format was kept identical to that of CA-OSCE to ensure comparability; the only difference being that instead of clinical images, real or simulated patients were used for evaluation. Each station was allotted a uniform period of 2 minutes, and students were required to physically move between the ten stations. One of the stations had a faculty member acting as a simulated patient and evaluated the students on the affective domain, while another had a commonly used drug or drug kit being used, with relevant listed questions. The remaining stations had real patients, who had to be examined and then the relevant short answer questions answered in a sheet. For a batch of forty students, it took approximately 100 minutes to complete Phase 2.
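The source does not describe how students were rotated through the stations. As a rough sketch, one simple model that is consistent with the reported duration assumes a single circuit in which each station holds one student at a time and a new student enters the first station at every 2-minute rotation. The function name and the rotation model are illustrative assumptions, not part of the study protocol.

```python
def osce_circuit_minutes(n_students: int, n_stations: int,
                         minutes_per_station: float) -> float:
    """Estimate total circuit time under an assumed pipeline model.

    Assumption (not stated in the source): one student per station,
    and a new student enters station 1 at every rotation. The last
    student therefore starts after (n_students - 1) rotations and
    needs n_stations further rotations to finish.
    """
    if n_students <= 0 or n_stations <= 0:
        return 0.0
    rotations = n_students + n_stations - 1
    return float(rotations * minutes_per_station)


if __name__ == "__main__":
    # Figures from the text: forty students, ten stations, 2 minutes each.
    print(osce_circuit_minutes(40, 10, 2))  # 98.0
```

Under this assumed model the estimate is 98 minutes, close to the approximately 100 minutes reported for Phase 2, against 35 minutes for the whole batch in Phase 1.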

Following this, the exiting students were required to fill up an anonymous questionnaire seeking their feedback regarding both the examination patterns. Faculty members conducting/invigilating both the phases were also asked to enter their subjective feedback.

The attendance, as well as the marks in both the examination formats, were meticulously recorded and statistically analyzed using SPSS version 20.0 (IBM Corp. Released 2011. IBM SPSS Statistics for Windows, Version 20.0. IBM Corp., Armonk, NY, USA). The reliability and internal consistency of both the formats were tested by calculating Cronbach's alpha coefficient using Intercooled Stata V9.0 (StataCorp., 2005. Stata Statistical Software: Release 9. StataCorp. LP, College Station, TX, USA).
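The study computed Cronbach's alpha in Stata; the item-level marks are not reported. As an illustration only, the standard formula, alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores), can be sketched in Python. The function name and the toy marks below are hypothetical.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a students x items table of marks.

    scores: list of rows, one row per student, one column per item
    (for example, the ten questions or ten stations of an examination).
    """
    n_students = len(scores)
    k = len(scores[0]) if scores else 0
    if n_students < 2 or k < 2:
        raise ValueError("need at least two students and two items")

    # Sample variance of each item across students.
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    # Sample variance of each student's total score.
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance")

    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


if __name__ == "__main__":
    # Hypothetical marks for five students on four items (not study data).
    toy = [
        [4, 5, 3, 4],
        [2, 3, 2, 3],
        [5, 5, 4, 5],
        [1, 2, 1, 2],
        [3, 4, 3, 3],
    ]
    print(round(cronbach_alpha(toy), 3))
```

Values closer to 1 indicate that the items vary together, which is how a range such as 0.76 to 0.87 is read as high internal consistency.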

Results

The batch comprised 156 students. After excluding the students on sick leave, those not regularly attending the posting due to supplementary examinations and those who did not appear for their final assessment, a total of 139 medical students were included in the final analysis.

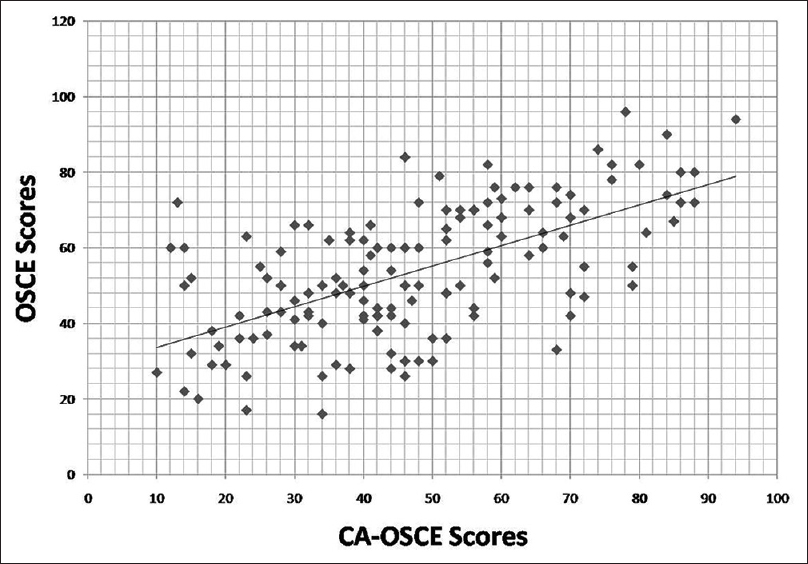

The mean attendance for the study group (n = 139) was 77% ± 12.0% (range, 23%–98%). The average score of the students on the CA-OSCE format was 47.4% ± 19.8% (range, 10%–94%), while on the OSCE format it was 53.5% ± 18% (range, 2%–96%). The students' scores on the two examination patterns had a significant positive correlation (Spearman's coefficient = 0.593, correlation considered significant at the 0.01 level) [Figure - 1].

Figure 1: Correlation between the two different assessment methods (*Spearman's coefficient = 0.593, correlation being taken significant at the 0.01 level)
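The correlation in the study was computed in SPSS on the actual marks, which are not reproduced here. As a minimal sketch of the technique, Spearman's coefficient can be obtained by converting each set of scores to ranks (tied values share their average rank) and then computing Pearson's correlation on the ranks. The function names and the toy scores are hypothetical.

```python
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks; tied values receive the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = avg
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson's r computed on the ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have equal length of at least 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("rank variance is zero; rho is undefined")
    return cov / (sx * sy)


if __name__ == "__main__":
    # Hypothetical percentage scores for six students (not study data).
    ca_osce = [42, 55, 30, 68, 47, 61]
    osce = [50, 58, 41, 66, 45, 70]
    print(round(spearman_rho(ca_osce, osce), 3))
```

Because it works on ranks, the coefficient is insensitive to the scale of marks and suits scores that may not be normally distributed.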

Spearman's rank correlation coefficient between attendance scores and the assessment score was 0.485 for OSCE and 0.451 for CA-OSCE, showing that the performance of students in both the formats correlated significantly with their attendance as well [Table - 1] and [Figure - 2].

Figure 2: Correlation between the attendance scores of the students and their marks on both the formats
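The text does not state which significance test was applied to the Spearman coefficients. As a rough check, assuming the common large-sample t approximation with n = 139 students (137 degrees of freedom), the reported coefficients give:

$$
t = r_s \sqrt{\frac{n - 2}{1 - r_s^{2}}}
$$

$$
r_s = 0.593:\ t \approx 0.593 \sqrt{\tfrac{137}{0.648}} \approx 8.62, \qquad
r_s = 0.485:\ t \approx 0.485 \sqrt{\tfrac{137}{0.765}} \approx 6.49, \qquad
r_s = 0.451:\ t \approx 0.451 \sqrt{\tfrac{137}{0.797}} \approx 5.91
$$

Each value is well above the two-tailed critical value of roughly 2.6 at the 0.01 level for 137 degrees of freedom, which is consistent with all three correlations being reported as significant.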

The students were further divided into two groups based on an attendance cut-off of 75% (the minimum essential attendance as mandated by the Medical Council of India).[14] A total of 89 students (64.1%) recorded >75% attendance (Group 1); while 50 students (35.9%) recorded <75% attendance (Group 2). The mean scores on OSCE and CA-OSCE of these two student groups are summarized in [Table - 2].

It can be seen that the mean assessment scores of Group 1 students were higher than those of Group 2 in both the examination formats. Spearman's rho for nonparametric data also shows that the correlation between the scores on CA-OSCE and OSCE was better for the Group 1 students (attending >75% classes) than for those attending fewer classes.

The Cronbach's alpha coefficient for both the examinations was consistently high [Table - 3], indicating good internal consistency and reliability of both methods of evaluation.

The feedback from students was collected by means of a questionnaire [Table - 4]. The students graded their responses on a 3-point Likert scale (not prevalidated). The majority of the students rated OSCE as a better means of evaluation. Out of 139 students, 102 (73.4%) found it easier to attempt an examination by viewing real dermatoses than by viewing clinical images. Sixty-eight students (48.9%) said that the time allotted per question was sufficient in OSCE but not in CA-OSCE, while 26 students (18.7%) were undecided. On the other hand, 97 students (69.8%) rated CA-OSCE as a more systematic (less chaotic) examination pattern than OSCE. When asked to choose, 86 students (61.9%) chose OSCE to be retained as the mode of assessment in future.

Examiners' subjective feedback was collected through an open-ended questionnaire. It showed that although both the examination patterns covered relevant topics well, CA-OSCE seemed less chaotic and easier to manage, and the chances of cheating were negligible. The majority of the faculty were in favor of CA-OSCE as the mode of future assessments.

Discussion

In medical education, OSCE has time and again been proven to be a valid and reliable assessment method.[2],[3],[4],[5],[6],[7] A properly designed OSCE is a great tool to assess students' knowledge and clinical competence.[15] It covers all the levels of Miller's pyramid and hence constitutes a valid examination.[1] At the same time, the traditional multistation OSCE format suffers from shortcomings such as excessive requirement of time, resources and workforce.[11],[12]

In recent times, CA-OSCE has been tried to overcome these shortcomings.[11],[12],[13] The time required to set up multiple stations is saved, and limitations such as patient non-cooperation and observer fatigue are bypassed. Dermatology departments usually face shortage of faculty; hence, CA-OSCE appears to be an effective and innovative assessment tool. We had introduced this format in our institution 4 years ago and it has been accepted well by both students and faculty.[11] Our institution had pioneered the replacement of traditional, theoretical, essay type questions being used previously with CA-OSCE in the year 2010.[11] The change was aimed at evaluating the higher cognitive skills of the students instead of purely theoretical knowledge. As “assessment drives learning,” it was seen that the change in assessment pattern led to an improved attendance as well.[11] However, it fell short in assessing the psychomotor and affective domains. In a subsequent analysis, Kaliyadan et al. found a good correlation between CA-OSCE scores and overall academic performance of the students.[12] The big question still remains if CA-OSCE can completely replace the more exhaustive OSCE.

From an operational point of view, the study highlights certain basic differences in the examination formats. Based on the findings of the study, [Table - 5] summarizes an overall comparative evaluation of both the examination patterns.

The results showed that students tended to score slightly better in OSCE as compared to CA-OSCE. This may be attributed to multiple factors. The more obvious explanation could be that the students were able to identify the dermatoses better in real patients than in clinical images. However, on close scrutiny, it was found that there could be other factors at work. In an OSCE, they reportedly got sufficient time to answer and thus could go back to a previous question in case they changed their mind upon seeing further questions. Admittedly, there were much better prospects of cheating too, because of the relatively chaotic atmosphere. Students tend to intermingle and discuss answers in an OSCE setting. Furthermore, with OSCE, the subjective bias of the examiner comes into play as the examiner could be sympathetic and award better marks. Previous studies have proved the validity of CA-OSCE in dermatology and shown a good correlation with the traditional evaluation system and overall academic performance of a student.[12],[13] Our study proved its effectiveness in comparison to OSCE as well.

When asked to choose, more students overall preferred OSCE over CA-OSCE; however, on individual points, the latter had more acceptance among students as well as faculty. Students found this system less chaotic and more uniform. All the required instructions are given to the whole batch simultaneously, avoiding any unnecessary confusion. The system was also less stressful for both examiners and examinees. We perceived that time constraints bothered many students; hence, in the future CA-OSCE could incorporate a time-out or a timed rest interval after some questions, so that students can go back and make changes in their answers, or attempt left out questions if required.[13]

Our findings also show that in both of these formats, the scores directly correlate with the students' attendance. This implies that the students who attend clinics regularly were able to score better in both the formats. They were better equipped to identify dermatoses and also tackle clinical management-related issues. Thus, both examination patterns reflected high validity, i.e., both patterns could assess true clinical knowledge. There was a marginally better correlation of OSCE scores with attendance as compared to CA-OSCE scores; however, this difference was not statistically significant. The assessment scores of both the examination patterns were mutually correlated, and it was found that a well-read, regular student performed equally well in both the patterns.

Limitations

An ideal examination format should be able to assess all three domains of learning (cognitive, psychomotor and affective). CA-OSCE has limitations as it can assess only the cognitive domain (though more objectively than standard written exams). OSCE stations having a simulated patient (a faculty member) enabled us to assess the affective domain; however, psychomotor skill evaluation could still not be done, as that would require stations asking students to perform a bedside test or procedure. Our resources did not permit this, but future studies can attempt to include such stations. Furthermore, ours was a cross-sectional study involving a single undergraduate batch; future longitudinal studies with multiple batches can throw more light on the subject.

Conclusions

OSCE is an effective assessment method in medical education, owing to its ability to test all domains of learning (cognitive, psychomotor and affective) simultaneously and objectively. However, our study found CA-OSCE to be an equally effective tool in assessing dermatology undergraduate trainees, and this could be the technique of choice for examiners in the setting of limited faculty and limited resources. Further acceptability can be improved by small, simple measures such as ensuring good quality images and incorporating rest intervals. It would be useful to incorporate innovations for assessment of psychomotor and affective domains within this system, by designing hybrid formats (limited station OSCE with CA-OSCE). Further studies with larger sample sizes can help in improving the effectiveness of these formats.

Financial support and sponsorship

Nil.

Conflicts of interest

There are no conflicts of interest.

References

1. Harden RM, Gleeson FA. Assessment of clinical competence using an objective structured clinical examination (OSCE). Med Educ 1979;13:41-54.

2. Cohen R, Rothman AI, Poldre P, Ross J. Validity and generalizability of global ratings in an objective structured clinical examination. Acad Med 1991;66:545-8.

3. Fowell SL, Bligh JG. Recent developments in assessing medical students. Postgrad Med J 1998;74:18-24.

4. Hodges B, Hanson M, McNaughton N, Regehr G; University of Toronto Psychiatric Skills Assessment Project. Creating, monitoring, and improving a psychiatry OSCE: A guide for faculty. Acad Psychiatry 2002;26:134-61.

5. Almoallim H. (OSCE) in Internal medicine for undergraduate students newly encountered with clinical training. Med Ed Portal 2006;2:429.

6. Vaidya NA. Psychiatry clerkship objective structured clinical examination is here to stay. Acad Psychiatry 2008;32:177-9.

7. Amr M, Raddad D, Afifi Z. Objective structured clinical examination (OSCE) during psychiatry clerkship in a Saudi university. Arab J Psychiatry 2012;23:69-73.

8. Biran LA. Self-assessment and learning through GOSCE (group objective structured clinical examination). Med Educ 1991;25:475-9.

9. Cookson J, Crossley J, Fagan G, McKendree J, Mohsen A. A final clinical examination using a sequential design to improve cost-effectiveness. Med Educ 2011;45:741-7.

10. Zartman RR, McWhorter AG, Seale NS, Boone WJ. Using OSCE-based evaluation: Curricular impact over time. J Dent Educ 2002;66:1323-30.

11. Grover C, Bhattacharya SN, Pandhi D, Singal A, Kumar P. Computer assisted objective structured clinical examination: A useful tool for dermatology undergraduate assessment. Indian J Dermatol Venereol Leprol 2012;78:519.

12. Kaliyadan F, Khan AS, Kuruvilla J, Feroze K. Validation of a computer based objective structured clinical examination in the assessment of undergraduate dermatology courses. Indian J Dermatol Venereol Leprol 2014;80:134-6.

13. Holyfield LJ, Bolin KA, Rankin KV, Shulman JD, Jones DL, Eden BD. Use of computer technology to modify objective structured clinical examinations. J Dent Educ 2005;69:1133-6.

14. Medical Council of India. Salient Features of Regulations on Graduate Medical Education, 1997. Published in Part III, Section 4 of the Gazette of India, dated 17th May 1997. Available from: http://www.mciindia.org/know/rules/rules_mbbs.html. [Last accessed on 2011 Nov 14].

15. Gupta P, Dewan P, Singh T. Objective structured clinical examination (OSCE) revisited. Indian Pediatr 2010;47:911-20.
